Intuitive, Interactive Beard and Hair Synthesis With Generative Models

CVPR 2020 (Oral Presentation)

Kyle Olszewski, Duygu Ceylan, Jun Xing, Jose Echevarria, Zhili Chen, Weikai Chen, and Hao Li

University of Southern California, Adobe Research

USC Institute for Creative Technologies

Abstract

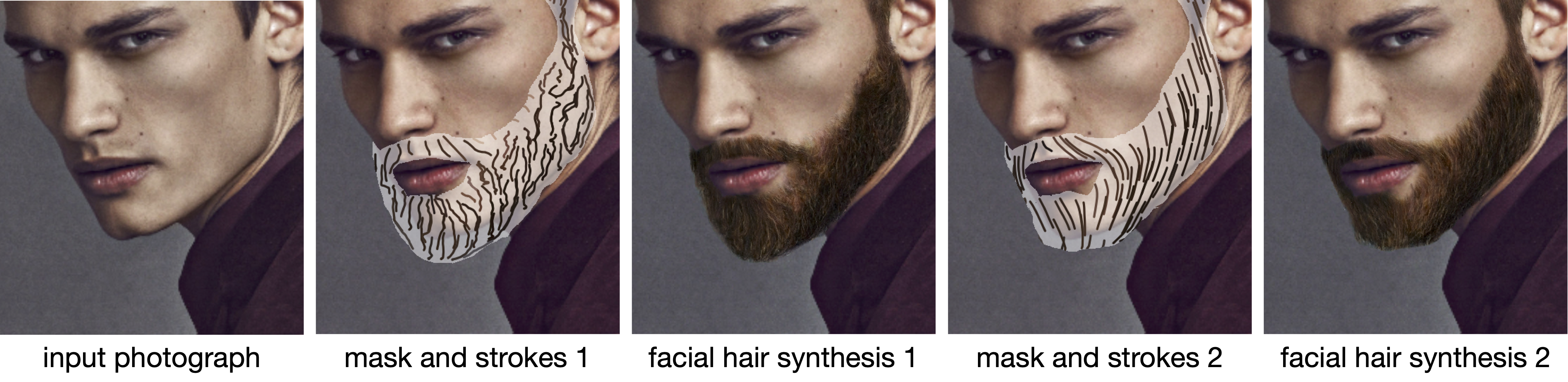

We present an interactive approach to synthesizing realistic variations in facial hair in images, ranging from subtle edits to existing hair to the addition of complex and challenging hair in images of clean-shaven subjects. To circumvent the tedious and computationally expensive tasks of modeling, rendering and compositing the 3D geometry of the target hairstyle using the traditional graphics pipeline, we employ a neural network pipeline that synthesizes realistic and detailed images of facial hair directly in the target image in under one second. The synthesis is controlled by simple and sparse guide strokes from the user defining the general structural and color properties of the target hairstyle. We qualitatively and quantitatively evaluate our chosen method compared to several alternative approaches. We show compelling interactive editing results with a prototype user interface that allows novice users to progressively refine the generated image to match their desired hairstyle, and demonstrate that our approach also allows for flexible and high-fidelity scalp hair synthesis.

Citation

@InProceedings{Olszewski_2020_CVPR,
  author = {Olszewski, Kyle and Ceylan, Duygu and Xing, Jun and Echevarria, Jose and Chen, Zhili and Chen, Weikai and Li, Hao},
  title = {Intuitive, Interactive Beard and Hair Synthesis With Generative Models},
  booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2020}
}